apologize!

games = windows, still ?

(although there are 6000 Steam games running on Linux)

the very first “Open Source GPU” is in the making: https://www.crowdsupply.com/libre-risc-v/m-class

“The Libre RISC-V M-Class is a RISC-V chip that is libre-licensed to the bedrock.

It is a low-power, mobile-class, 64-bit Quad-Core SoC at a minimum 800mhz clock rate, suitable for tablet, netbook, and industrial embedded systems.

Full source code and files are available not only for the operating system and bootloader, but also for the processor, its peripherals and its 3D GPU and VPU.”

… so “Kazan” will be aiming at the mobile market, not necessarily the desktop / workstation “give me 100 fps on this latest game” market. Hope they can do it nevertheless and scale up from there.

more on open source GPUs: http://nyuzi.org

“Nyuzi is an experimental GPGPU processor hardware design focused on compute intensive tasks. It is optimized for use cases like deep learning and image processing.” (src: GitHub.com)

that’s probably a clever thing to do: simulate the hardware in software first and then, later, build it.

http://www.cs.binghamton.edu/~millerti/nyami-ispass2015.pdf

(as seen on: nextplatform.com)

games and AI: all need GPUs

the stuck userbenchmark:

one probably knows: https://www.userbenchmark.com/

a fast-running benchmark that feeds a publicly accessible website and its database, which lets one compare one’s system against all the other tested systems out there.

if userbenchmark reports “internet connectivity failed” before any benchmarking starts:

this is another pretty useless error message, because it does not point one to the actual problem.

- test internet: ping yahoo.de works perfectly fine

- it might be because the CMOS clock is not set to the current date: with a clock stuck in the past, userbenchmark cannot establish a trusted encrypted connection, because from the PC’s point of view the server certificate only becomes valid in the future.

- solution:

- manually set the CMOS clock in the BIOS to the current date (pressing DEL or F2 right after power-on usually gets one into the BIOS)

- or let the OS sync with: 0.de.pool.ntp.org (195.201.19.162)
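The clock theory above can be sketched in a few lines of Python. This is only an illustration of the validity-window check that a TLS client performs, not userbenchmark's actual code; the function name `clock_problem` and the certificate dates are made up for the example.

```python
from datetime import datetime, timezone

def clock_problem(now, not_before, not_after):
    """Compare the local clock against a certificate's validity window."""
    if now < not_before:
        return "clock too early: certificate is not yet valid"
    if now > not_after:
        return "clock too late: certificate looks expired"
    return "clock ok"

# Hypothetical validity window of a server certificate (assumption)
not_before = datetime(2019, 1, 1, tzinfo=timezone.utc)
not_after = datetime(2021, 1, 1, tzinfo=timezone.utc)

# A PC with a dead CMOS battery often boots with a date years in the past
stale_clock = datetime(2009, 1, 1, tzinfo=timezone.utc)

print(clock_problem(stale_clock, not_before, not_after))
```

A ping still works in this situation because ICMP does not involve certificates at all, which is why the "internet connectivity failed" message is so misleading.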

https://www.userbenchmark.com/page/guide#benchmark

test results for this PC:

it is official: the once pretty nice XFX Nvidia GeForce 8800 GTS

is almost 3x slower while consuming 40 W more than…

https://www.userbenchmark.com/UserRun/23197079

…the far more compact (and more recent, but still old in 2019) GTX 550 Ti

https://www.userbenchmark.com/UserRun/23194993

2020-01: upgrades for the gaming PC!

+ salvaged 128 GB SSD from an old MacBook (it was swapped for a bigger one)

+ swapped the GPU for a salvaged GTX 1050: +200% GPU performance 🙂 it is still rated “Surfboard” for Gaming, but now “JetSki” for Desktop X-D

Energy usage: 0.3 W when off, 120 W during boot, 65 W at idle, 150 W at 100% CPU load, 180 W during the GPU benchmark “catzilla”
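To get a feeling for what these numbers mean over a year, here is a rough back-of-the-envelope sketch. The 65 W and 0.3 W values are the measurements above; the hours per day and the electricity price are pure assumptions, so plug in your own.

```python
def yearly_kwh(watts, hours_per_day):
    """Energy in kWh per year for a constant draw over some hours each day."""
    return watts * hours_per_day * 365 / 1000

# Measured values from this post
IDLE_W = 65
OFF_W = 0.3

# Assumptions (hypothetical): 8 h/day idling, 16 h/day switched off,
# and an electricity price of 0.30 EUR per kWh
PRICE_EUR_PER_KWH = 0.30

idle_kwh = yearly_kwh(IDLE_W, 8)
off_kwh = yearly_kwh(OFF_W, 16)

print(f"idle: {idle_kwh:.1f} kWh/year, about {idle_kwh * PRICE_EUR_PER_KWH:.0f} EUR")
print(f"off:  {off_kwh:.1f} kWh/year, about {off_kwh * PRICE_EUR_PER_KWH:.2f} EUR")
```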

https://www.userbenchmark.com/UserRun/23675431

second run:

https://www.userbenchmark.com/UserRun/23675746

13001 on second run… fine with that 🙂

The whole PC draws 65 W at idle! (For comparison: this embedded super fast workstation uses around 20 W.)

CatZilla is a pretty nice (500 MByte, Windows only?) GPU / 3D benchmark from Poland!

here is their GPU top list: https://www.catzilla.com/topbenchmarks/best-of/best-gpu

currently leading the charts is the “NVIDIA TITAN RTX” (which costs 1000-3000 €, has 24 GByte of RAM and uses 200-300 W!)

nowadays “best buy” would be: GTX 1060

or: AMD RX 580

(both roughly 150 bucks)

GPUs are energy hungry… and used for AI?

GPU vs CPU computing

Below we only provide a very brief overview of these two computational modes. More details can be found in many online tutorials, for instance here

- Training of large neural networks is frequently performed on so-called GPUs (Graphics processing unit). These are specialised devices, which are ideally suited for training and prediction in deep learning applications. Many of the open-source libraries require GPUs of Nvidia. Such a device will not be available on every computer. Libraries might further depend on which specific GPU will be used.

- As an alternative, computations can be performed on the CPU (central processing unit), which is the general-purpose processing unit of every computer. Here, training is often slow and in extreme cases not even possible. However, trained networks can frequently be used with a CPU. While every computer will have a CPU, libraries will depend on the precise hardware specification and also the operating system.

- Installations can depend on the operating system, e.g. to account for differences between Windows and Linux. This could be accounted for by operating-system-specific tags, such as CPU_Windows for a plugin that will be using CPUs on Windows.
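How such a tag could be derived on a given machine, as a minimal sketch: only `CPU_Windows` appears in the quoted text, so the `GPU_...` variants and the helper name `plugin_tag` are assumptions for illustration and not necessarily what ImJoy itself uses. Whether an Nvidia GPU is present is passed in by hand here, because detecting it reliably depends on the installed drivers.

```python
import platform

def plugin_tag(os_name, has_nvidia_gpu):
    """Build a tag like 'CPU_Windows' from device type and operating system."""
    device = "GPU" if has_nvidia_gpu else "CPU"
    return f"{device}_{os_name}"

# platform.system() returns e.g. 'Windows', 'Linux' or 'Darwin'
print(plugin_tag(platform.system(), has_nvidia_gpu=False))
```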

src: https://github.com/imjoy-team/example-plugins

Most energy efficient GPU in 2019?

by u/JasonTheHasher in gpumining

actually NVIDIA deserves a boycott for not being very Open Source friendly. But if one is concerned about power consumption during gaming, “NVIDIA Turing” is said to be the most power efficient, while still consuming 200 W! (src) (around 200 bucks for a GeForce RTX 2060)

https://en.wikipedia.org/wiki/GeForce_20_series

New features in Turing:

- CUDA Compute Capability 7.5

- Mesh shaders[10]

- Ray tracing (RT) cores – bounding volume hierarchy acceleration[11]

- Tensor (AI) cores – neural net artificial intelligence – large matrix operations

- New memory controller with GDDR6 support

- DisplayPort 1.4a with Display Stream Compression (DSC) 1.2

- PureVideo Feature Set J hardware video decoding

- GPU Boost 4

- NVLink Bridge

- VirtualLink VR

- Improved NVENC codec

- Dedicated Integer (INT) cores for concurrent execution of integer and floating point operations[12]
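What the “large matrix operations” of the Tensor cores boil down to is a fused multiply-accumulate on small matrix tiles, D = A × B + C, with the inputs stored in half precision. The following is only a slow pure-Python emulation of that idea, not CUDA and not how the hardware is programmed; the helper names `to_half` and `mma` are made up, and the accumulator here is a Python float (double precision) instead of the FP16/FP32 accumulator of the real hardware.

```python
import struct

def to_half(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]

def mma(a, b, c):
    """Return a @ b + c, with the entries of a and b rounded to half precision."""
    rows, inner, cols = len(a), len(b), len(b[0])
    d = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            acc = c[i][j]  # accumulator starts with C (kept in full precision here)
            for p in range(inner):
                acc += to_half(a[i][p]) * to_half(b[p][j])
            d[i][j] = acc
    return d

identity = [[1.0, 0.0], [0.0, 1.0]]
b = [[2.0, 3.0], [4.0, 5.0]]
c = [[1.0, 1.0], [1.0, 1.0]]

print(mma(identity, b, c))  # [[3.0, 4.0], [5.0, 6.0]]
print(to_half(0.1))         # not exactly 0.1: half precision has only about 3 decimal digits
```

The second print shows why this only works well for neural nets: the inputs lose a lot of precision, which deep learning tolerates but many classic numerical workloads do not.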

When AI fails: Peter Haas, TEDxDirigo

What happens when humans trust AI too much: the researchers had no idea what was happening, or why the AI mistook a dog for a wolf.

It has to be used properly… but nobody is allowed to see the source code and check it for problems.

“black box AI algorithms drive trucks, cars and decide if you can get a loan.”

“we have to implement checks and balances”

Tensor Cores and AI:

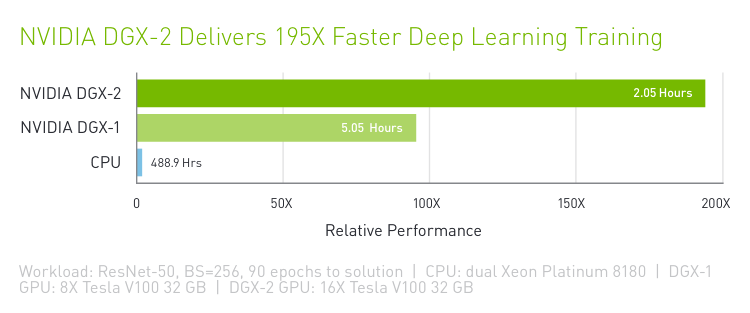

The successor of the Nvidia DGX-1 is the Nvidia DGX-2, which uses 16 V100 (second generation) cards with 32 GB each in a single unit. This increases performance to up to 2 petaflops with 512 GB of shared memory for tackling larger problems and uses NVSwitch to speed up internal communication.

https://en.wikipedia.org/wiki/Nvidia_DGX
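The DGX-2 numbers in the quote can be cross-checked with simple arithmetic. All inputs are taken from the quoted text; the per-card figure is just the quoted total divided evenly by 16, not an official spec.

```python
CARDS = 16
MEMORY_PER_CARD_GB = 32
TOTAL_PETAFLOPS = 2  # "up to", according to the quote

total_memory_gb = CARDS * MEMORY_PER_CARD_GB
tflops_per_card = TOTAL_PETAFLOPS * 1000 / CARDS

print(total_memory_gb)  # 512, matches the 512 GB of shared memory
print(tflops_per_card)  # 125.0 teraflops per card
```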

The tensor processing unit was announced in May 2016 at Google I/O, when the company said that the TPU had already been used inside their data centers for over a year.[1][2] The chip has been specifically designed for Google’s TensorFlow framework, a symbolic math library which is used for machine learning applications such as neural networks.[3] However, Google still uses CPUs and GPUs for other types of machine learning.[1] Other AI accelerator designs are appearing from other vendors also and are aimed at embedded and robotics markets. (src)

https://devblogs.nvidia.com/programming-tensor-cores-cuda-9/

is OpenAI using TensorFlow on GPUs?

more detailed:

What is Deep Learning used for?

Programs have learned to play games better than humans (but then the question remains how much information is given to the AI… does it “see” all the enemies through walls?)

Because right now AIs even have problems distinguishing between pictures of cars, buses and bicycles… but they are getting better at it, and it is crucial for self-driving cars.

Google applies neural networks to translate language.

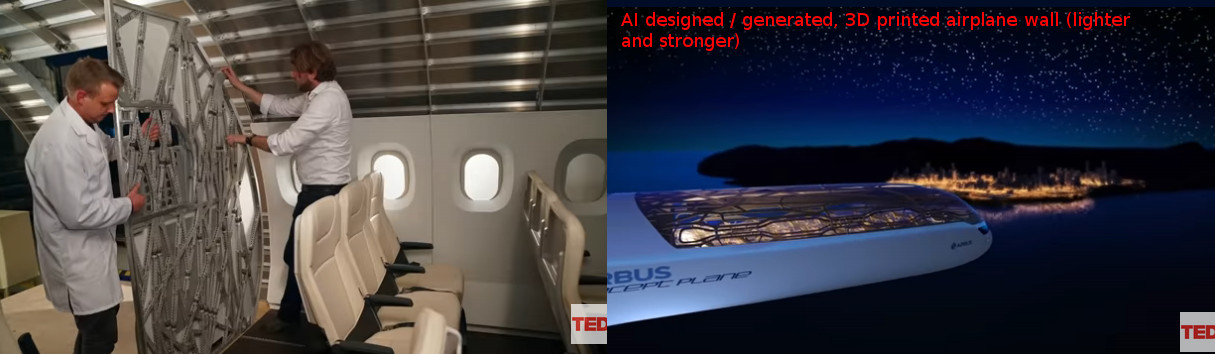

Some companies apply deep learning to engineering and design problems, like “what would the best wall for an airplane look like?”

“Artificial intelligence (AI) is much more than a research field: it is a ubiquitous future technology with the potential to redefine all areas of our society. At Airbus, we believe AI is a key competitive advantage that enables us to capitalise on the value of our data.”

https://www.airbus.com/innovation/future-technology/artificial-intelligence.html

“You probably heard the silly news that Facebook turned off its chatbot, which went out of control and made up its own language. …

For training, they collected a dataset of human negotiations and trained a supervised recurrent network. Then, they took a reinforcement learning trained agent and trained it to talk with itself, setting a limit — the similarity of the language to human.”

src: https://blog.statsbot.co/deep-learning-achievements-4c563e034257

Links:

https://nerdpol.ch/tags/deeplearning

https://towardsdatascience.com/understanding-and-coding-a-resnet-in-keras-446d7ff84d33

sources:

https://github.com/davidcpage/cifar10-fast
